Key Takeaways

- ISO/IEC 42001 certification demonstrates that Cornerstone governs AI responsibly across the full lifecycle of its workforce development platform.

- Responsible AI is essential as workforce platforms increasingly shape careers, skills, and opportunity.

- Certification builds trust, reduces risk, and differentiates AI-driven platforms beyond pure innovation.

In December 2025, Cornerstone announced that Cornerstone Galaxy achieved ISO/IEC 42001 certification, the global standard for Responsible AI. Published in December 2023 as the world’s first certifiable AI management system standard, ISO/IEC 42001 is designed to help organizations balance rapid AI innovation with responsible governance. The standard has become increasingly critical as artificial intelligence (AI) becomes deeply embedded in workforce development platforms, shaping how people learn, build skills, and progress in their careers.

While AI enables unprecedented scale and personalization, it also introduces specific risks, including bias, lack of transparency, system failures, and unintended harm to individuals. For organizations that rely on AI-driven workforce development platforms, these risks are not theoretical; they have real consequences for people and organizations alike.

As a leading global workforce development platform, Cornerstone sees firsthand the growing impact of AI on careers and opportunities. This makes Responsible AI governance not only a business priority, but also a broader societal imperative.

This article explains:

- What is ISO/IEC 42001 certification and what does it take to obtain it?

- Why is Responsible AI now essential for workforce development platforms?

- Why does ISO/IEC 42001 differentiate AI-driven workforce development platforms?

- Why does ISO/IEC 42001 matter for organizations using workforce development platforms?

- The top 9 challenges ISO/IEC 42001 helps overcome

- The 5 benefits of ISO/IEC 42001 for workforce platforms

- Why ISO/IEC 42001 certification makes an organization stand out

What is ISO/IEC 42001 certification?

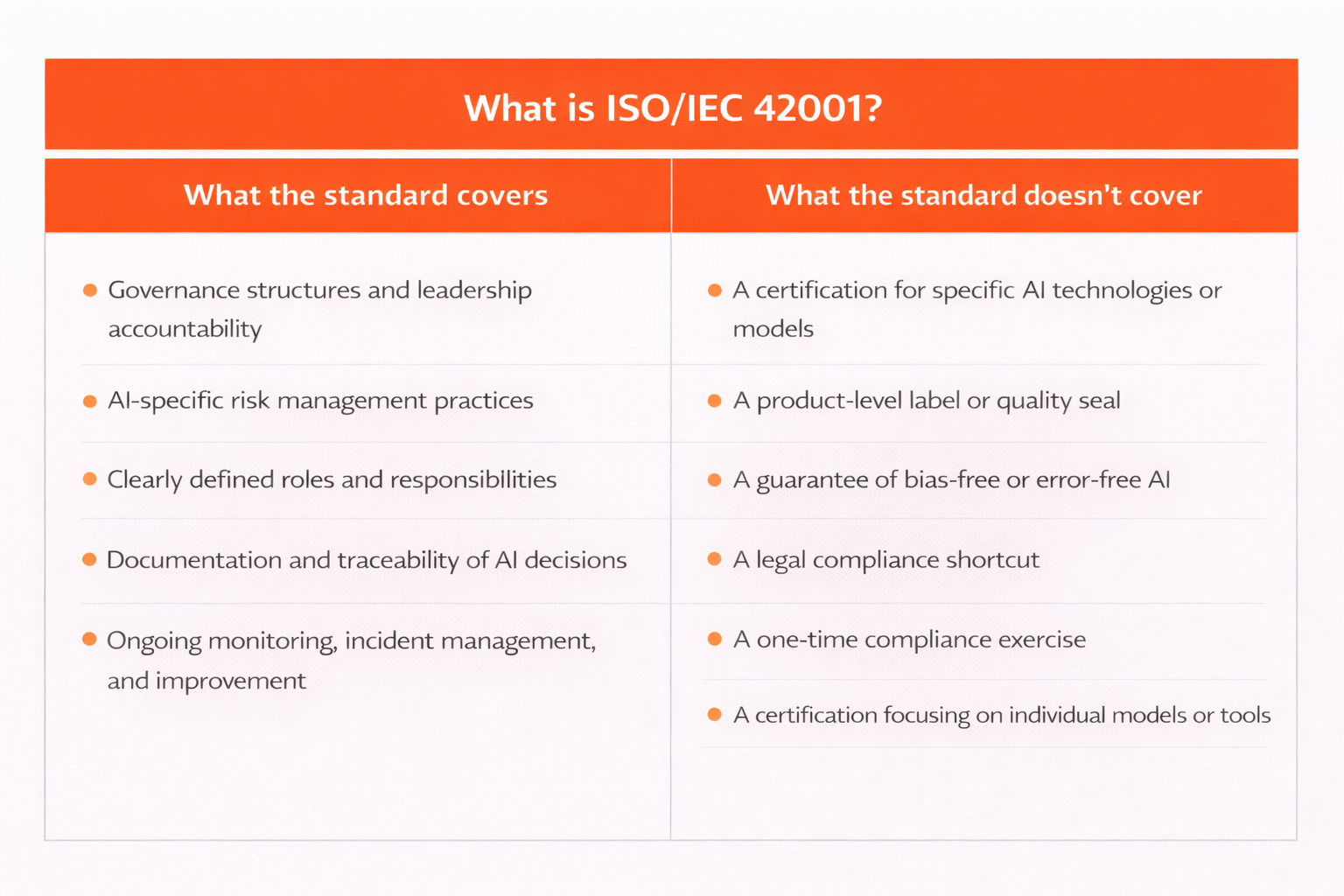

ISO/IEC 42001 is the world's first certifiable international standard for Artificial Intelligence Management Systems (AIMS). It establishes a comprehensive governance framework for how organizations design, deploy, operate and monitor AI systems across their lifecycle, ensuring transparency, accountability, ethical use, and continuous risk management.

The standard helps enterprises demonstrate accountability and align with emerging regulations, such as the EU AI Act and U.S. AI initiatives.

What is an AI Management System (AIMS)?

An Artificial Intelligence Management System (AIMS) is a structured framework for governing the design, deployment, and operation of AI systems across an organization. It ensures that AI is used responsibly, transparently, and in alignment with regulatory and ethical expectations.

An AIMS defines governance structures, manages AI-related risks, enforces compliance, and supports continuous improvement. It also establishes roles, controls, and training requirements to ensure accountability and competence across AI activities.

Key components of ISO/IEC 42001

ISO/IEC 42001 defines the essential components required to establish and maintain an effective AI Management System:

- AI Management System (AIMS): A structured framework governing AI systems, data, and AI-related processes throughout their lifecycle

- AI Risk Management: Identification, assessment, and mitigation of risks such as bias, accountability gaps, data protection issues, and regulatory exposure

- Ethical and Responsible AI: Principles ensuring transparency, fairness, accountability, and responsible use

- Quality and Reliability: Controls to ensure robustness, accuracy, and reliability of AI systems and data

- Continuous Monitoring and Improvement: Ongoing evaluation of AI performance, governance effectiveness, and risk exposure

- Stakeholder Involvement and Governance: Engagement of relevant stakeholders to support informed decision-making and oversight

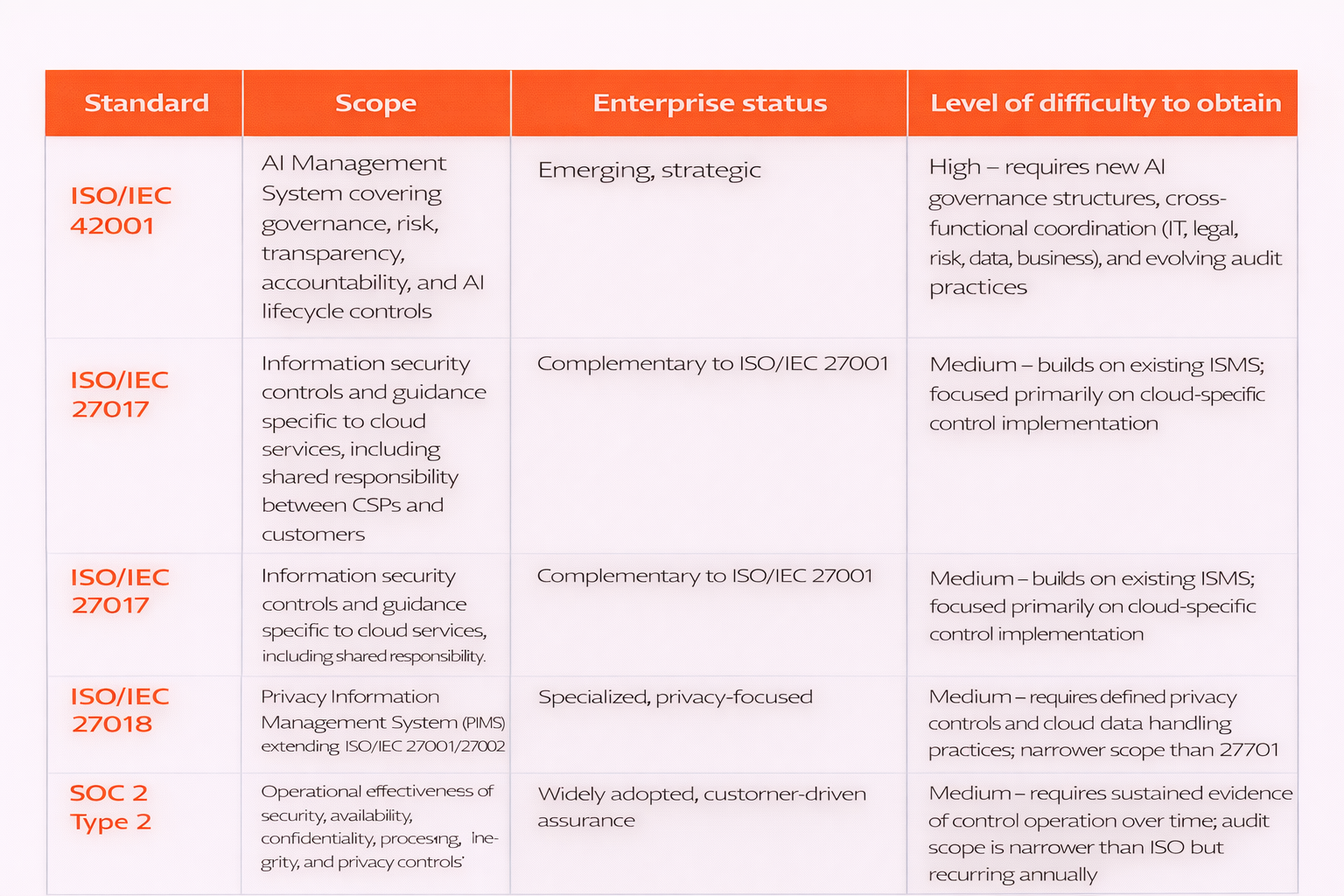

How ISO/IEC 42001 works with other security and privacy certifications

ISO/IEC 42001 is the latest addition to a family of enterprise governance frameworks that includes:

- ISO/IEC 27001 defines how an organization secures its information systems by establishing an Information Security Management System (ISMS).

- ISO/IEC 27701 extends ISO/IEC 27001 and ISO/IEC 27002 into privacy, adding personal data protection controls to create a Privacy Information Management System (PIMS) that enables organizations to manage privacy risks and comply with data protection regulations as controllers and/or processors of personally identifiable information (PII).

- ISO/IEC 27017 defines security controls and implementation guidance specifically for cloud services, clarifying shared security responsibilities between cloud service providers and cloud customers.

- ISO/IEC 27018 focuses on the protection of PII in public cloud environments, establishing privacy principles and controls for cloud service providers acting as PII processors.

- ISO/IEC 42001 establishes an AI Management System to govern AI responsibly across its lifecycle, addressing transparency, accountability, and risk.

Security, privacy, and cloud standards such as ISO/IEC 27001, ISO/IEC 27701, ISO/IEC 27017, ISO/IEC 27018, and SOC 2 Type II form a foundational governance layer for protecting data and systems. ISO/IEC 42001 builds on this foundation by introducing AI-specific governance, addressing risks related to AI lifecycle management, transparency, accountability, and human oversight. Together, these standards create a layered governance ecosystem that strengthens enterprise risk management as organizations adopt AI.

What it takes to get ISO/IEC 42001 certified

Achieving ISO/IEC 42001 certification is a rigorous, enterprise-wide process, comparable in scope and discipline to established management system standards such as ISO/IEC 27001.

Certification is granted following a comprehensive independent audit conducted by an accredited conformity assessment body (CAB). The audit assesses whether an organization’s AI Management System fully conforms to the requirements defined by the standard and is effectively implemented across the organization.

To be certified, organizations must demonstrate operationalized controls across all relevant clauses and annexes, including:

- AI governance and leadership accountability

- AI-specific risk management

- Transparency and documentation of AI decisions

- Bias mitigation and human oversight

- Continuous monitoring, incident management, and improvement

This certification is valid for three years, with annual surveillance audits to verify ongoing compliance.

Why is Responsible AI governance now essential for workforce development platforms?

A workforce development platform uses technology, increasingly driven by AI, to help enterprises upskill employees, identify and validate skills, support career mobility, and align talent development with business strategy.

As a workforce in which Humans + AI enhance each other becomes a key differentiator for organizations, these platforms play a vital role in organizational resilience, agility, and productivity. And as AI increasingly shapes learning, skills, and career mobility, it brings heightened responsibility.

Rapid increase in AI incidents

- Documented AI safety incidents rose from 149 in 2023 to 233 in 2024, a 56.4% year-on-year increase (Stanford AI Index, 2025; Stanford University Responsible AI, 2025).

- These incidents include flawed content moderation, unsafe automation, election misinformation, and privacy violations (Stanford AI Index, 2025).

- The AI Incident Database tracks over 1,200 real-world incidents, with many more likely unreported (MIT AI Risk Initiative, 2025).

AI-enabled cyber risks

- Phishing volumes increased 202% in late 2024 due to AI-generated content (Tech Advisors, 2025).

- Credential phishing attacks surged 703% (Tech Advisors, 2025).

- 82.6% of phishing emails now use AI (Tech Advisors, 2025).

- AI-generated phishing saw 78% open rates and 21% click-throughs (Tech Advisors, 2025).

- Deepfakes now represent 6.5% of fraud attacks, up 2,137% since 2022 (Tech Advisors, 2025).

- In one high-profile case, a firm lost $25 million to a deepfake CFO scam (Tech Advisors, 2025).

AI trust and risk perception

- 78% of organizations use AI, yet many worry about it: 64% cite accuracy concerns, 63% regulatory compliance risks, and 60% cybersecurity issues (Stanford AI Index, 2025).

- Public trust in AI companies has declined from 50% to 47% amid rising incidents (Stanford AI Index, 2025).

Why ISO/IEC 42001 differentiates AI-driven workforce development platforms

Responsibility as a true differentiator

In a market where nearly every organization claims to be “AI-driven,” technological capability alone is no longer enough. Responsibility has become the real differentiator, especially for customers affected by AI-driven decisions.

A signal of seriousness and rigor

ISO/IEC 42001 is not a symbolic certification. It requires enterprise-wide commitment, cross-functional governance, documented controls, and independent audits. For customers, this level of rigor is a clear signal that Responsible AI practices are embedded into operations and not treated as an afterthought or aspiration.

Building trust in invisible systems

AI systems often operate behind the scenes, making decisions that users cannot easily see or understand. ISO/IEC 42001 provides reassurance by demonstrating that:

- AI decisions are governed, documented, and continuously monitored

- Risks are proactively identified, assessed, and mitigated

- Ethical principles are embedded into daily operations

- Independent audits verify accountability and oversight

Stability in a fast-moving AI landscape

As AI models evolve, vendors change, and automation accelerates, trust can become fragile. ISO/IEC 42001 provides stability, transparency, and assurance amid constant technological change.

Why does ISO/IEC 42001 matter for organizations using workforce development platforms?

For workforce development platforms where artificial intelligence (AI) directly influences skills development, career mobility, and workforce decisions, Responsible AI governance is essential. ISO/IEC 42001 provides a globally recognized AI governance standard that helps organizations ensure their AI systems are governed, transparent, and accountable across the full AI lifecycle.

By establishing an AI management system, ISO/IEC 42001 enables workforce platforms to balance innovation with responsibility, protecting both individuals and organizations from unintended harm while building long-term trust in AI-driven outcomes.

Impact on employees and learners

For employees and learners, ISO/IEC 42001 contributes to ensuring that AI-driven workforce development systems are not only effective, but fair, transparent, and trustworthy. Specifically, the standard helps ensure that AI-driven platforms:

- Support fair and equitable access to learning, skills development, and career opportunities

- Provide transparent and explainable AI-based recommendations for skills, roles, and career pathways

- Reduce bias in assessments, talent matching, and advancement decisions

- Protect individuals from unintended or long-term harm as AI influences employability and career progression

Impact on organizations

For organizations using AI-enabled workforce development platforms, ISO/IEC 42001 helps ensure that AI systems:

- Empower leaders with reliable, trustworthy recommendations to improve productivity, talent planning, and workforce strategy.

- Support consistent AI governance as models evolve and third-party vendors change

- Strengthen confidence in AI systems used to assist in critical business and people-related decisions

What ISO/IEC 42001 looks like in practice: Cornerstone

Cornerstone OnDemand is among the first workforce development platforms globally to achieve ISO/IEC 42001 certification. This milestone reflects a sustained, independently verified commitment to Responsible AI governance, transforming ethical principles into measurable, auditable practice rather than aspirational intent.

“Responsible AI governance is quickly becoming a prerequisite, not a differentiator, for HR technology buyers,” said Stacey Harris, Chief Research Officer and Managing Partner at Sapient Insights Group. “In our research, more than a quarter of organizations cite data quality and governance as their top barrier to adopting AI, which tells us trust remains fragile. Achieving ISO/IEC 42001 is an important signal that Cornerstone is focusing on AI operational accountability, giving organizations greater confidence that AI-driven workforce decisions are governed, transparent, and designed to scale responsibly.”

Cornerstone’s approach to ISO/IEC 42001 is operationalized through its Framework for Responsible and Ethical AI, which defines how AI systems are designed, governed, monitored, and continuously improved across the platform. The framework embeds accountability, risk management, transparency, and human oversight into day-to-day operations, ensuring Responsible AI is applied consistently as technologies evolve.

For organizational customers, this provides:

- Clear governance and accountability through defined leadership oversight of AI systems

- Structured assessment of AI-related risks, including bias, safety, and unintended impacts

- Continuous monitoring and improvement of AI performance over time

- Independent validation of Responsible AI governance through external audits

- Reduced vendor risk during procurement, compliance reviews, and audits

- Greater confidence in AI-driven learning, skills, and career decisions

- Scalable AI governance that evolves alongside organizational needs and advancing technologies

The top 9 challenges ISO/IEC 42001 helps overcome

ISO/IEC 42001 translates Responsible AI from principles into practice. It addresses many of the most common and persistent challenges organizations face as AI adoption accelerates:

1. Lack of control over AI systems: Without governance, AI initiatives are fragmented, undocumented, and poorly owned. ISO/IEC 42001 establishes clear accountability, governance structures, and documentation so AI systems become managed assets rather than “black projects.”

2. Unmanaged AI risks: Risks such as bias, hallucinations, and unsafe outputs often go unchecked. ISO/IEC 42001 introduces structured risk assessments across the AI lifecycle, reducing surprises and strengthening defensibility.

3. Regulatory uncertainty: Organizations struggle to interpret evolving regulations. ISO/IEC 42001 provides a regulatory-aligned framework and evidence of due diligence during audits or investigations.

4. Third-party AI risks: External models, tools and vendors introduce hidden risks. ISO/IEC 42001 integrates supplier risk management into AI governance.

5. Inconsistent AI practices: Different teams build AI differently. The standard creates shared policies and lifecycle practices across the organization.

6. Lack of ongoing monitoring: AI systems can degrade or drift after deployment. ISO/IEC 42001 mandates continuous monitoring, incident response, and controlled updates.

7. Ethical AI without operational governance: Ethical principles often lack operational meaning. ISO/IEC 42001 embeds ethics into governance, training, and accountability.

8. Loss of stakeholder trust: Public trust in AI companies declined from ~50% to 47% as incidents increased (Stanford AI Index, 2025). Certification demonstrates credible commitment to Responsible AI.

9. Difficulty scaling AI responsibly: AI works in pilots but breaks at scale. ISO/IEC 42001 enables scalable governance using continuous improvement (Plan–Do–Check–Act).

The 5 benefits of ISO/IEC 42001 for workforce platforms

ISO/IEC 42001 provides practical, business-relevant value that extends beyond compliance, particularly for workforce platforms where AI directly influences people, skills, and careers.

1. Responsible AI use: The standard translates ethical principles into operational controls. Workforce platforms can demonstrate that AI systems influencing hiring, learning, and career mobility are governed, monitored, and improved, not deployed blindly.

2. Stronger reputation and trust: Certification provides independent validation that AI is managed responsibly. For customers, partners, and end users, ISO/IEC 42001 serves as a credible signal that AI decisions affecting people are taken seriously and handled with care.

3. Regulatory alignment: ISO/IEC 42001 aligns with emerging global regulations such as the EU AI Act and U.S. AI initiatives. It helps workforce platforms stay ahead of regulatory change and demonstrate due diligence during audits, assessments, or customer reviews.

4. Practical risk management: The standard introduces lifecycle-based risk management tailored to AI — covering bias, data quality, model drift, third-party tools, and unintended impacts — reducing operational, legal, and reputational risk.

5. Innovation within a structured framework: Rather than slowing innovation, ISO/IEC 42001 enables organizations to scale AI safely. Clear governance, shared practices, and continuous improvement allow teams to innovate faster without increasing risk.

Conclusion

Artificial intelligence is reshaping how people learn, grow, and work, but trust in AI remains fragile. As AI-related incidents increase and regulatory expectations continue to evolve, Responsible AI governance has become a business-critical requirement, especially where AI influences people and careers.

ISO/IEC 42001 provides a structured, auditable framework for governing AI responsibly at scale, embedding accountability, transparency, and continuous improvement into everyday AI operations.

This is why Cornerstone pursued ISO/IEC 42001 certification: to operationalize Responsible AI governance across its workforce development platform and to ensure that people remain at the center of AI-driven learning and career outcomes.

For workforce development platforms, ISO/IEC 42001 represents more than compliance; it is a commitment to trust, responsibility and sustainable value.